Behind the Scenes: Our ML Lab

In our latest article, we dive into the exciting world of Rask AI's lip-sync technology, with guidance from the company's Head of Machine Learning, Dima Vypirailenko. We take you behind the scenes at Brask ML Lab, a center of excellence for technology, where we see firsthand how this innovative AI tool is making waves in content creation and distribution. Our team includes world-class ML engineers and VFX Synthetic Artists who are not just adapting to the future but creating it.

Join us to discover how this technology is transforming the creative industry, reducing costs, and helping creators reach audiences around the world.

What is Lip-Sync Technology?

One of the primary challenges in video localization is the unnatural movement of lips. Lip-sync technology is designed to help synchronize lip movements with multilingual audio tracks effectively.

As we learned in our latest article, lip-syncing is far more complex than getting the timing right: the mouth movements have to be right too. Every spoken sound shapes the speaker's face. An "O", for example, rounds the mouth into an oval, so it cannot pass for an "M", and that adds considerable complexity to the dubbing process.

Introducing the new Lip-sync model with better quality!

Our ML team has decided to enhance the existing lip-sync model. What was the reason behind this decision, and what's new in this version compared to the beta version?

Significant efforts were made to enhance the model, including:

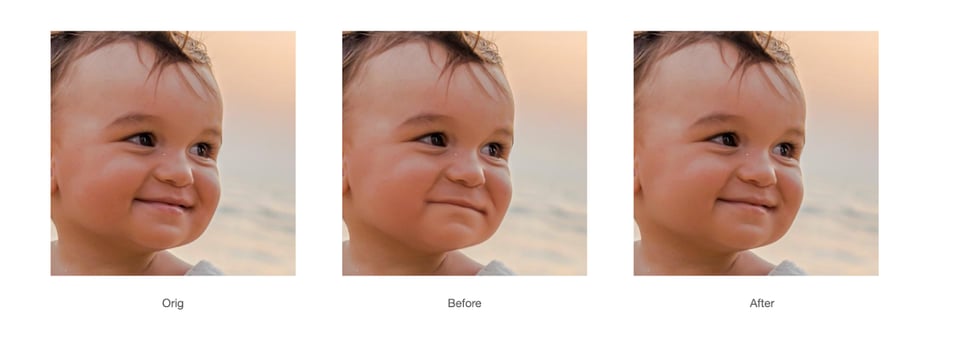

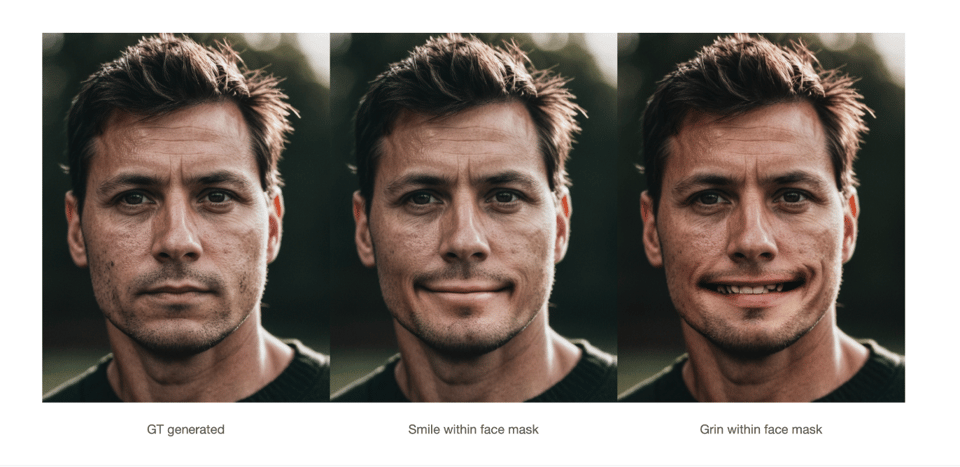

- Improved Accuracy: We refined the AI algorithms to better analyze and match the phonetic details of spoken language, leading to more accurate lip movements that are closely synchronized with the audio in multiple languages.

- Enhanced Naturalness: By integrating more advanced motion capture data and refining our machine learning techniques, we have significantly improved the naturalness of the lip movements, making the characters’ speech appear more fluid and lifelike.

- Increased Speed and Efficiency: We optimized the model to process videos faster without sacrificing quality, facilitating quicker turnaround times for projects that require large-scale localization.

- User Feedback Incorporation: We actively collected feedback from users of the beta version and incorporated their insights into the development process to address specific issues and enhance overall user satisfaction.

How exactly does our AI model synchronize lip movements with translated audio?

Dima: “Our AI model works by combining the information from the translated audio with information about the person’s face in the frame, and then merges these into the final output. This integration ensures that the lip movements are accurately synchronized with the translated speech, providing a seamless viewing experience”.

What unique features make Premium Lip-Sync ideal for high-quality content?

Dima: “Premium Lip-sync is specifically designed to handle high-quality content through its unique features such as multispeaker capability and high-resolution support. It can process videos up to 2K resolution, ensuring that the visual quality is maintained without compromise. Additionally, the multispeaker feature allows for accurate lip synchronization across different speakers within the same video, making it highly effective for complex productions involving multiple characters or speakers. These features make Premium Lipsync a top choice for creators aiming for professional-grade content”.

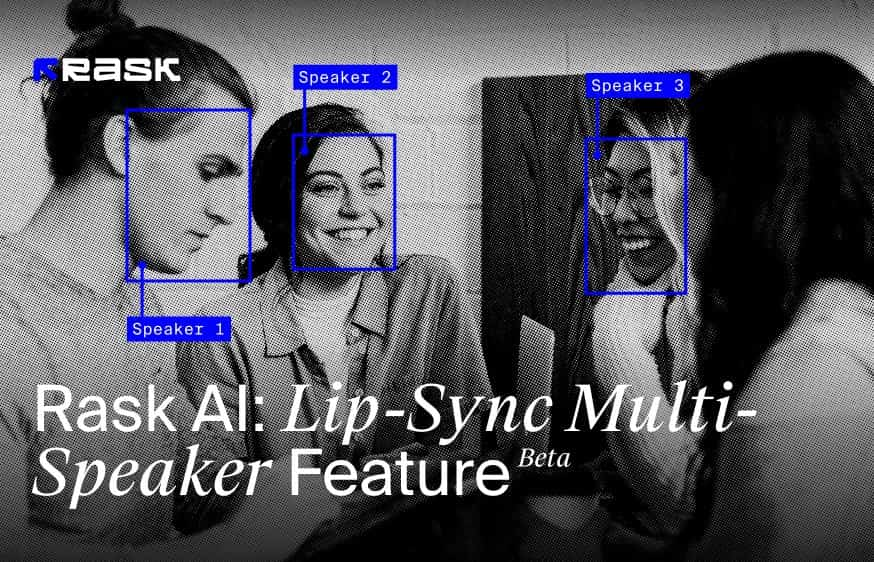

And what is a Lip-Sync Multi-Speaker Feature?

The Multi-Speaker Lip-Sync feature is designed to accurately sync lip movements with spoken audio in videos that feature multiple people. This advanced technology identifies and differentiates between multiple faces in a single frame, ensuring that the lip movements of each individual are correctly animated according to their spoken words.

How Multi-Speaker Lip-Sync Works:

- Face Recognition in Frame: The feature initially recognizes all faces present in the video frame, regardless of the number. It's capable of identifying each individual, which is crucial for accurate lip synchronization.

- Audio Matching: During the video playback, the technology aligns the audio track specifically with the person who is speaking. This precise matching process ensures that the voice and lip movements are in sync.

- Lip Movement Synchronization: Once the speaking individual is identified, the lip-sync feature redraws the lip movements for only the speaking person. Non-speaking individuals in the frame will not have their lip movements altered, maintaining their natural state throughout the video. This synchronization applies exclusively to the active speaker, making it effective even in the presence of off-screen voices or multiple faces in the scene.

- Handling Static Images of Lips: Interestingly, this technology is also sophisticated enough to redraw lip movements on static images of lips if they appear in the video frame, demonstrating its versatile capability.

This Multi-Speaker Lip-Sync feature enhances the realism and viewer engagement in scenes with multiple speakers or complex video settings by ensuring that only the lips of the speaking individuals move in accordance with the audio. This targeted approach helps maintain the focus on the active speaker and preserves the natural dynamics of group interactions in videos.
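The selection step described above can be pictured with a small sketch. It is an illustration only, not Rask AI's implementation: the `Face` and `SpeechSegment` types, their fields, and the `faces_to_redraw` helper are all hypothetical, and the sketch assumes an earlier stage has already matched each voice to a face (or marked it as off-screen).

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Face:
    """A face detected in the video (hypothetical structure)."""
    face_id: str


@dataclass(frozen=True)
class SpeechSegment:
    """A stretch of translated audio attributed to one speaker (hypothetical)."""
    start: float  # seconds
    end: float    # seconds
    face_id: Optional[str]  # None means the voice is off-screen


def faces_to_redraw(faces: List[Face], segments: List[SpeechSegment], t: float) -> Set[str]:
    """Return the ids of faces whose lips should be redrawn at time t.

    Only faces that are both visible and speaking at time t are selected;
    silent faces and off-screen voices leave the frame untouched.
    """
    visible = {f.face_id for f in faces}
    speaking = {
        s.face_id
        for s in segments
        if s.face_id is not None and s.start <= t < s.end
    }
    return visible & speaking


faces = [Face("anna"), Face("ben")]
segments = [
    SpeechSegment(0.0, 2.0, "anna"),
    SpeechSegment(2.0, 4.0, None),  # narrator, off-screen
    SpeechSegment(4.0, 6.0, "ben"),
]

print(faces_to_redraw(faces, segments, 1.0))  # {'anna'}
print(faces_to_redraw(faces, segments, 3.0))  # set()
print(faces_to_redraw(faces, segments, 5.0))  # {'ben'}
```

At 3.0 seconds only the off-screen narrator is talking, so no face is selected and both on-screen faces keep their original lip movements.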

From just one video, in any language, you can create hundreds of personalized videos featuring various offers in multiple languages. This versatility revolutionizes how marketers can engage with diverse and global audiences, enhancing the impact and reach of promotional content.

How do you balance between quality and processing speed in the new, Premium Lip-sync?

Dima: “Balancing high quality with fast processing speed in Premium Lipsync is challenging, yet we have made significant strides in optimizing our model’s inference. This optimization allows us to output the best possible quality at a decent speed”.

Are there any interesting imperfections or surprises you encountered while training the model?

Additionally, working with occlusions around the mouth area has proven to be quite difficult. These elements require careful attention to detail and sophisticated modeling to achieve a realistic and accurate representation in our lip-sync technology.

How does the ML team ensure user data privacy and protection when processing video materials?

Dima: Our ML team takes user data privacy and protection very seriously. For the Lipsync model, we do not use customer data for training, thus eliminating any risk of identity theft. We solely rely on open-source data that comes with appropriate licenses for training our model. Additionally, the model operates as a separate instance for each user, ensuring that the final video is delivered only to the specific user and preventing any data entanglement.

At our core, we are committed to empowering creators, ensuring the responsible use of AI in content creation, with a focus on legal rights and ethical transparency. We guarantee that your videos, photos, voices, and likenesses will never be used without explicit permission, ensuring the protection of your personal data and creative assets.

We are proud members of The Coalition for Content Provenance and Authenticity (C2PA) and The Content Authenticity Initiative, reflecting our dedication to content integrity and authenticity in the digital age. Furthermore, our founder and CEO, Maria Chmir, is recognized in the Women in AI Ethics™ directory, highlighting our leadership in ethical AI practices.

What are the future prospects for the development of lip-sync technology? Are there specific areas that particularly excite you?

Dima: We believe that our lip-sync technology can serve as a foundation for further development towards digital avatars. We envision a future where anyone can create and localize content without incurring video production costs.

In the short term, within the next two months, we are committed to enhancing our model's performance and quality. Our goal is to ensure smooth operation on 4K videos and to improve results for videos translated into Asian languages. These advancements are crucial as we aim to broaden the accessibility and usability of our technology, paving the way for innovative applications in digital content creation.

Breaking language barriers has never been so close! Try our enhanced lip-sync functionality and send us your feedback on this feature.

FAQ

Lip-sync is available on Creator Pro, Archive Pro, Business, and Enterprise plans.

One minute of lip-sync generated equals one minute deducted from your total minutes balance.

Lip-sync minutes are deducted just like when dubbing your videos.

Lip-sync is charged separately from dubbing. For example, to translate and lip-sync a 1-minute video into 1 language, you need 2 minutes.
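As a worked example of the billing rule above, the sketch below adds up the minutes for a job. The `minutes_needed` function is hypothetical, and it assumes that each additional target language is billed the same way as the first, which the FAQ does not state explicitly.

```python
def minutes_needed(video_minutes: int, languages: int = 1, lip_sync: bool = True) -> int:
    """Estimate the minutes deducted for dubbing plus optional lip-sync.

    Assumption: dubbing costs one minute per video minute per language,
    and lip-sync, charged separately, costs the same again.
    """
    dubbing = video_minutes * languages
    lip_sync_minutes = video_minutes * languages if lip_sync else 0
    return dubbing + lip_sync_minutes


print(minutes_needed(1))                  # 2, the example from the FAQ
print(minutes_needed(1, lip_sync=False))  # 1, dubbing only
```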

Before generating lip-sync, you will be able to test 1 free minute to evaluate the quality of the technology.

The speed of lip-sync generation depends on the number of speakers in the video and on the video's duration, quality, and size.

For example, here are approximate lip-sync generation times for different videos:

Videos with one speaker:

- 4-minute 1080p video ≈ 29 minutes

- 10-minute 1080p video ≈ 2 hours 10 minutes

- 10-minute 4K video ≈ 8 hours

Videos with 3 speakers:

- 10-minute 1080p video ≈ 5 hours 20 minutes
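To compare these figures, it can help to express each one as processing time per minute of source video. The short sketch below does only that arithmetic on the approximate numbers quoted above; the `processing_ratio` helper is hypothetical, and the ratios are rough indications rather than a formula for predicting generation time.

```python
# Approximate figures quoted above: (label, video minutes, processing minutes)
examples = [
    ("1 speaker, 4 min, 1080p", 4, 29),
    ("1 speaker, 10 min, 1080p", 10, 2 * 60 + 10),
    ("1 speaker, 10 min, 4K", 10, 8 * 60),
    ("3 speakers, 10 min, 1080p", 10, 5 * 60 + 20),
]


def processing_ratio(video_minutes: float, processing_minutes: float) -> float:
    """Processing minutes spent per minute of source video."""
    return processing_minutes / video_minutes


for label, video, processing in examples:
    ratio = processing_ratio(video, processing)
    print(f"{label}: about {ratio:.2f} min of processing per video minute")
```

On these numbers the ratio is not constant: it grows with video length, resolution, and the number of speakers, which matches the factors listed above.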

How to generate lip-sync:

- Upload your video via a link from YouTube or Google Drive, or upload the file directly from your device. Choose the target language and click the translate button.

- Add a voiceover to your video in Rask AI using the “Dub video” button.

- To check if your video is compatible with Lip-sync, click the "Lip-sync check" button.

- If it's compatible, proceed by tapping the Lip-sync button.

- Next, select the number of faces in your video, either "1" or "2+", then tap "Start Lip-sync". Just a heads up, this is about the number of faces, not speakers.
